Rendering transparent objects is not an easy task when using z-buffering, because correct blending requires all transparent objects to be drawn from back to front. This is not always possible: two triangles (or objects) could intersect each other, for example, making it impossible to tell which one is in front of the other. To solve this problem, you need an algorithm that is order independent, hence the name order-independent transparency. There are already a few algorithms for this: the A-Buffer, Depth Peeling, Screen-Door Transparency and Stochastic Transparency, which I implemented as a test for another demo.

Stochastic Transparency is related to Screen-Door Transparency, which creates transparency by simply omitting individual (sub-)pixels of transparent surfaces. For example, for a surface with 50% transparency you would only draw every second pixel of the surface. In OpenGL this can be done with the “discard” keyword in the fragment shader, and other APIs offer an equivalent. Stochastic Transparency does the same, except that it renders the scene multiple times with random samples and accumulates the rendered images. The advantage over Depth Peeling is that you need fewer samples for the image. The disadvantage is that, since Stochastic Transparency is a Monte Carlo algorithm, it produces a lot of noise when the number of samples is low. For my purposes it is better suited than Depth Peeling, so I gave it a try.
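To see why random discarding plus averaging ends up looking like ordinary blending, here is a small CPU-side sketch in Python for a single grayscale pixel. It is not my renderer, just an illustration under a few assumptions: alpha is treated as opacity (a fragment is kept when its random sample is below alpha), smaller depth means closer to the camera, and the names `blend_sorted` and `stochastic_pixel` are made up for this example.

```python
import random


def blend_sorted(layers, background):
    """Reference result: classic back-to-front blending.

    Each layer is a (depth, alpha, color) tuple; larger depth is farther away.
    """
    color = background
    for _depth, alpha, layer_color in sorted(layers, key=lambda l: l[0], reverse=True):
        color = alpha * layer_color + (1.0 - alpha) * color
    return color


def stochastic_pixel(layers, background, num_samples, rng):
    """Average num_samples passes in which fragments are randomly discarded."""
    total = 0.0
    for _ in range(num_samples):
        nearest_depth = float("inf")
        pixel = background
        for depth, alpha, layer_color in layers:  # any submission order
            if rng.random() >= alpha:
                continue  # fragment discarded in this pass
            if depth < nearest_depth:  # plain z-test among the survivors
                nearest_depth = depth
                pixel = layer_color
        total += pixel
    return total / num_samples


if __name__ == "__main__":
    rng = random.Random(1)
    layers = [(1.0, 0.5, 1.0), (2.0, 0.5, 0.2)]  # (depth, alpha, color)
    print("sorted blending:", blend_sorted(layers, 0.0))
    print("stochastic     :", stochastic_pixel(layers, 0.0, 20000, rng))
```

The stochastic version never sorts anything. It only relies on the z-test, which is what makes the approach order independent. The estimate gets closer to the sorted result as the number of samples grows.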

The implementation was straightforward for the most part. To accumulate the rendered images, at least two framebuffers are needed, since it is not possible to read from and write to the same texture in the same pass. Both framebuffers are initialized with all pixels black. In a loop, the scene is rendered to the first framebuffer with the texture of the second framebuffer added, and after that the framebuffers are swapped for the next loop iteration. This technique is also known as framebuffer ping-ponging. The number of loop iterations is equal to the number of samples per pixel.
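The ping-pong loop can be sketched on the CPU like this. Plain Python lists stand in for the two framebuffer textures, and `render_pass` is a hypothetical callback that returns one rendered image per sample. The final division by the number of samples is my assumption about how the accumulated sum is turned back into a displayable image; it could just as well happen in a final shader pass.

```python
def accumulate_ping_pong(render_pass, num_pixels, num_samples):
    """Accumulate num_samples rendered images using two alternating buffers."""
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")

    read_buf = [0.0] * num_pixels   # both buffers start out black
    write_buf = [0.0] * num_pixels

    for sample_index in range(num_samples):
        rendered = render_pass(sample_index)
        # Write "previous sum + new image" into the buffer we are not reading from.
        for i in range(num_pixels):
            write_buf[i] = read_buf[i] + rendered[i]
        # Swap roles: the buffer just written becomes the input of the next pass.
        read_buf, write_buf = write_buf, read_buf

    # After the last swap, read_buf holds the full sum (assumed normalization).
    return [value / num_samples for value in read_buf]


if __name__ == "__main__":
    # Toy pass: the first pixel is drawn in every second pass, the second never.
    def toy_pass(sample_index):
        return [1.0, 0.0] if sample_index % 2 == 0 else [0.0, 0.0]

    print(accumulate_ping_pong(toy_pass, num_pixels=2, num_samples=4))
```

Each buffer is only ever read or written within one iteration, never both, which mirrors the restriction on real framebuffer textures.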

To determine whether a pixel is discarded, a random number is compared to the alpha value of the transparent surface. If it is larger than the alpha value, the pixel is discarded. In more theoretical terms, the alpha value is the probability that the pixel is drawn. This only works for uniformly distributed random numbers. My first idea was to use a screen-space noise texture for the noise samples. However, this won’t work, since the same samples would be used for objects at different depths. The solution is a small 3D noise texture which is tiled many times across the scene, giving each point in the scene a reasonably unique sample value. This works well enough for me, although there may be better ways.
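Here is a rough Python sketch of the tiled 3D noise lookup and the discard test. Everything specific in it is an assumption made for illustration: the function names, the nearest-cell lookup (a real texture fetch may filter), the `cells_per_unit` scale, and the `pass_offset` used to get a different sample in each pass. Alpha is treated as opacity, so a fragment is kept when its noise sample is below alpha.

```python
import math
import random


def make_noise_volume(size, rng):
    """Small size^3 table of uniform random values in [0, 1)."""
    return [[[rng.random() for _ in range(size)]
             for _ in range(size)]
            for _ in range(size)]


def sample_tiled_noise(volume, position, cells_per_unit, pass_offset=(0, 0, 0)):
    """Look up the noise cell for a world-space position, repeating the volume."""
    size = len(volume)
    # The modulo wraps the index, so the small volume tiles across the scene.
    ix, iy, iz = (
        (math.floor(p * cells_per_unit) + o) % size
        for p, o in zip(position, pass_offset)
    )
    return volume[ix][iy][iz]


def is_discarded(volume, position, alpha, cells_per_unit=8.0, pass_offset=(0, 0, 0)):
    """A fragment is dropped when its noise sample is not below alpha."""
    return sample_tiled_noise(volume, position, cells_per_unit, pass_offset) >= alpha


if __name__ == "__main__":
    volume = make_noise_volume(4, random.Random(7))
    # Same screen position, different depth (z): generally a different sample.
    for z in (0.05, 0.20, 0.35):
        print(z, is_discarded(volume, (0.1, 0.2, z), alpha=0.5))
```

Because the lookup depends on the position in the scene rather than on the screen position, two surfaces that cover the same pixel at different depths generally get different samples.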

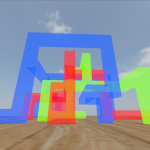

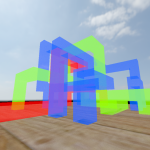

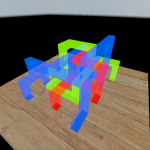

The images above show the results. I used 216 samples per pixel for them. Most of the noise is gone, but the framerate was a bit too low for everyday use. However, even with fewer samples the noise isn’t very distracting, and the paper linked above also shows some other ways to reduce the noise.